“As code velocity increases, code review becomes the bottleneck. Teams risk bad code reaching production, creating security issues or new bugs. The solution is AI code reviews.”

AI code review tools like Greptile act as a line of defense against bugs in production. Read on to learn how AI code review works, and why top teams use it to maintain high-quality standards.

What is AI Code Review?

AI code reviews improve the traditional pull request (PR) process by automatically analyzing changes, identifying potential bugs or security issues, and providing context-aware feedback before human reviewers step in.

In most modern software teams, engineers review each other's code as a PR before merging into the main codebase, ensuring changes meet standards and don't introduce problems. This peer review process helps catch bugs, maintain consistent practices, and preserve overall code quality.

As velocity rises, PRs stack up and engineers are more likely to miss nuances. That slows merges and can introduce bugs when teams cut corners to clear the bottleneck.

AI code review addresses this by evaluating changes the moment a PR opens. The benefits go beyond catching obvious mistakes:

- Catch bugs humans miss. Identify logic errors, syntax issues, and regressions before code reaches production.

- Improve security. Detect vulnerabilities (e.g., SQL injection, SSRF, unsafe input handling) as soon as they appear.

- Enforce consistency. Maintain team-wide standards without relying on memory or attention.

- Speed up delivery. Reduce back-and-forth so changes merge faster.

Unlike simple linters or static analysis tools, AI reviewers analyze code in context, provide clear, human-readable comments, and generate concise PR summaries. Tools like Greptile also learn over time, taking in manual feedback and enforcing custom rule sets the team uploads.
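To make the security point concrete, here is a minimal sketch of the kind of change a reviewer, human or AI, would be expected to flag: user input concatenated into a SQL string, shown next to a parameterized alternative. The function names, table, and columns are hypothetical, and the `$1` placeholder follows PostgreSQL's convention. Nothing here reflects how any particular tool detects the issue.

```typescript
// A parameterized query keeps SQL text and user-supplied values separate.
// Table and column names are made up for this example.
interface ParameterizedQuery {
  text: string;
  values: string[];
}

// Risky: user input is concatenated into the SQL text itself.
function unsafeFindUser(email: string): string {
  return `SELECT id FROM users WHERE email = '${email}'`;
}

// Safer: the input travels as a bound value and never becomes SQL text.
function safeFindUser(email: string): ParameterizedQuery {
  return { text: "SELECT id FROM users WHERE email = $1", values: [email] };
}

// A classic injection payload: it closes the string literal and adds a
// condition that is always true.
const attack = "x' OR '1'='1";

const unsafeSql = unsafeFindUser(attack);
const safeQuery = safeFindUser(attack);

console.log(unsafeSql); // the payload has become part of the WHERE clause
console.log(safeQuery.text, safeQuery.values); // the SQL text is unchanged
```

In the unsafe version the payload rewrites the query's logic. In the parameterized version the same string is only ever data.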

How Do AI Code Reviews Work?

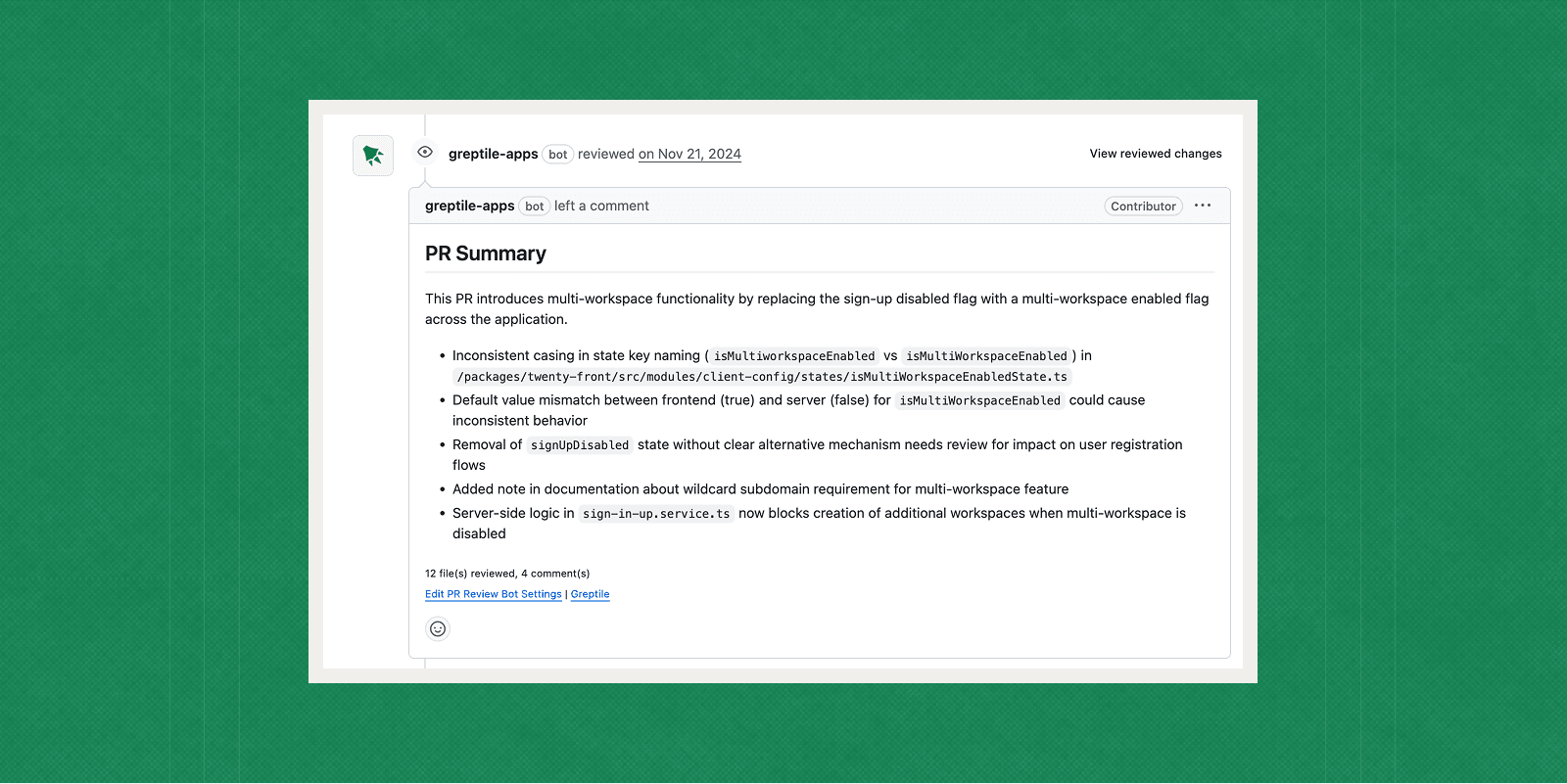

The feedback left by AI code reviewers lives directly in platforms like GitHub and GitLab, so it fits into existing workflows. A Greptile AI pull request review includes:

- PR summaries: An overview of what the pull request changes.

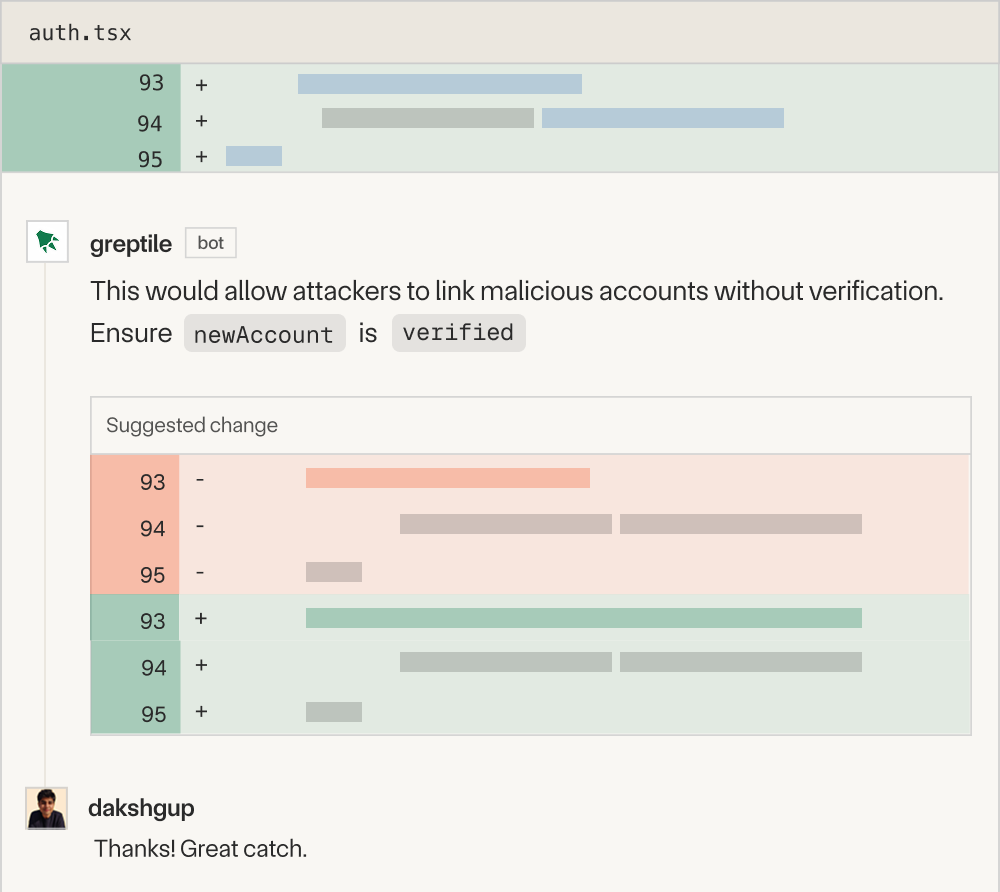

- Inline comments: Precise, line-level suggestions tied to the diff for fast fixes.

- Sequence diagrams: Auto-generated call flows so reviewers see "who calls what, in what order" at a glance.

- Context-aware suggestions: Analyze related files, APIs, configs, tests, docs, and history to understand intent—not just the diff. Context is critical because it surfaces hidden bugs early and keeps team standards consistent.

- Beyond-the-diff checks with iterative passes: Flag cross-layer issues (frontend/backend defaults, auth, env, deployment prerequisites), rescan after updates, and deliver an impact-ranked summary.

Greptile also lets developers tag the AI in a comment to ask follow-up questions or request clarification. By automating early review steps, AI reduces time to merge and lets human reviewers focus on higher-level feedback.

AI Code Review Example

Here's an example of AI code review in action:

This large PR added multi-workspace support with subdomain management and lets teams pick auth providers per workspace. It touched frontend state, GraphQL, server env and docs, and auth flows.

Greptile reviewed it twice as the scope evolved. The first pass surfaced integration risks that would cause subtle breakage; the second pass rescanned the expanded diff and summarized new cross-layer changes so reviewers could focus on what mattered most.

First pass, it caught:

- A naming inconsistency in the flag (isMultiworkspaceEnabled vs isMultiWorkspaceEnabled) that would break lookups across files. The project followed with a fix commit to align the casing.

- A default mismatch: the frontend treated the flag as true while the server defaulted to false, which would gate features inconsistently. A human reviewer echoed this, validating the risk.

- Removal of the prior signUpDisabled state without a clear replacement, putting registration paths at risk.

- An operational prerequisite buried in docs: wildcard subdomains are required to run multi-workspace.

- Confirmation that server logic blocks extra workspace creation when the feature is off, so gating happens beyond the UI.
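The first two findings can be sketched in a few lines. This is an illustration under assumptions, not code from the PR: only the two flag spellings and the true/false defaults come from the review. The `FlagStore` type, the `readFlag` helper, and the idea that flags are read by string key are hypothetical.

```typescript
// Hypothetical flag store keyed by string, as flags often are when they come
// from env vars or remote config. With string keys, a misspelled name is not
// a type error.
type FlagStore = Record<string, boolean | undefined>;

// Returns the stored flag, or the caller's fallback when the flag is absent.
function readFlag(store: FlagStore, name: string, fallback: boolean): boolean {
  const value = store[name];
  return value === undefined ? fallback : value;
}

// Casing mismatch: the flag is stored under one spelling and read under another.
const configured: FlagStore = { isMultiWorkspaceEnabled: true };
const correct = readFlag(configured, "isMultiWorkspaceEnabled", false);
const misspelled = readFlag(configured, "isMultiworkspaceEnabled", false);

// Default mismatch: when the flag is unset, each layer applies its own default.
const unset: FlagStore = {};
const frontendView = readFlag(unset, "isMultiWorkspaceEnabled", true);
const serverView = readFlag(unset, "isMultiWorkspaceEnabled", false);

console.log({ correct, misspelled, frontendView, serverView });
```

Both problems compile and run without error, which is why they tend to surface only as inconsistent behavior: the misspelled lookup silently falls back to `false`, and the two layers disagree whenever the flag is not set explicitly.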

After fixes and a broader update, Greptile ran a second pass and flagged:

- Subdomain support wired into the workspace entity with uniqueness and auth-provider flags, and urged careful testing of subdomain behavior.

- A new DomainManagerService that centralizes URL and subdomain handling across the app.

- An auth flow refactor so the frontend respects workspace-specific providers and subdomain-based routing, plus server-side validation utilities.

- Env config split from a single FRONT_BASE_URL to FRONT_DOMAIN / FRONT_PROTOCOL / FRONT_PORT to improve deployability.

- Concrete breakage risks: removing the JWT generation mutation without a clear replacement and a subdomain query that lacked a parameter—both could fail at runtime.
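One plausible reason the env split helps, sketched under assumptions: with separate protocol, domain, and port values, a workspace subdomain can be prepended to the host without parsing a full base URL. The `FrontendEnv` shape and the `buildWorkspaceUrl` helper are hypothetical. Only the three variable names come from the PR.

```typescript
// Hypothetical typed view of the three env values named in the PR.
interface FrontendEnv {
  FRONT_PROTOCOL: "http" | "https";
  FRONT_DOMAIN: string;
  FRONT_PORT?: number;
}

// Builds the frontend origin, optionally scoped to a workspace subdomain.
function buildWorkspaceUrl(env: FrontendEnv, subdomain?: string): string {
  const host = subdomain ? `${subdomain}.${env.FRONT_DOMAIN}` : env.FRONT_DOMAIN;
  // Omit the port when it is unset or is the protocol's default.
  const defaultPort = env.FRONT_PROTOCOL === "https" ? 443 : 80;
  const port =
    env.FRONT_PORT !== undefined && env.FRONT_PORT !== defaultPort
      ? `:${env.FRONT_PORT}`
      : "";
  return `${env.FRONT_PROTOCOL}://${host}${port}`;
}

const production = buildWorkspaceUrl(
  { FRONT_PROTOCOL: "https", FRONT_DOMAIN: "example.com" },
  "acme",
);
const local = buildWorkspaceUrl({
  FRONT_PROTOCOL: "http",
  FRONT_DOMAIN: "localhost",
  FRONT_PORT: 3001,
});

console.log(production); // https://acme.example.com
console.log(local); // http://localhost:3001
```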

Why did this matter, and why did Greptile surface it? These are cross-layer mismatches and flow regressions that linters and type checks rarely catch—and that humans struggle to spot quickly in a 160-plus-file PR. Greptile connected UI flags, server defaults, GraphQL, and docs in one review, then re-summarized after major changes so issues didn't slip through before merge.

Bottom line: Greptile prevented silent user-facing bugs and deployment foot-guns by doing end-to-end reasoning over a large, fast-moving PR.

A Buyer's Guide to AI Code Review

With the basics covered, the next step is deciding how to evaluate an AI code review tool. Weigh build vs. buy, the features that matter, and, ultimately, how well a tool catches bugs while fitting your workflow.

Keep Generation and Review Separate

Teams often ask if one product can both generate code and review it. It shouldn't. Creation and evaluation are different jobs, and the reviewer works best when it is independent.

Independence guards against two risks: shared assumptions that miss the same issues, and incentives that make a reviewer go easier on its own agent's output. An independent reviewer gives you a clean check before merge.

At today's velocity, more code is agent‑written and never read pre‑commit. Agents fail in novel ways: invented APIs, stray imports, hard‑coded values, and edits outside the intended files. The reliable place to catch that is the pull request.
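As a rough illustration of one of these failure modes, edits outside the intended files, the sketch below compares a PR's changed paths against the directories the task was supposed to touch. The helper name, the path-prefix convention, and the sample paths are all assumptions made for the example. A real reviewer would infer intent from far richer context than a prefix list.

```typescript
// Hypothetical helper: returns changed files that fall outside every intended
// path prefix.
function findOutOfScopeFiles(
  changedFiles: string[],
  intendedPrefixes: string[],
): string[] {
  return changedFiles.filter(
    (file) => !intendedPrefixes.some((prefix) => file.startsWith(prefix)),
  );
}

// Made-up example: the task was an auth change on the server.
const changed = [
  "packages/server/src/auth/login.ts",
  "packages/server/src/auth/login.test.ts",
  "packages/front/src/theme/colors.ts",
];

const outOfScope = findOutOfScopeFiles(changed, ["packages/server/src/auth/"]);

console.log(outOfScope); // only the theme file is outside the intended scope
```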

A review‑only tool should run on every PR, look beyond the diff to related files and configs, rescan after updates, and rank issues by impact. It should learn your rules, surface security and integration risks early, and not write code.

Benefits & Limitations of a Dedicated AI Code Review Tool

In practice, here's what AI code review delivers today—and the limits you should plan around.

Benefits (what you should actually expect):

- Lower time to merge. Early, automated comments cut back-and-forth and let human reviewers focus on design decisions, not nits. Greptile cuts time to merge from ~20 hours to 1.8 hours.

- Fewer production bugs. Good systems catch cross-layer issues (defaults, auth, config, env) that slip past linters and type checks.

- Consistent standards. Enforces naming, patterns, and team rules, and learns from feedback over time.

- Security surfaced earlier. Flags obvious injection/unsafe handling in the PR, not in a post-deploy scan.

- Better reviewer focus. PR summaries and impact-ranked findings help triage large diffs fast. (Greptile also adds sequence diagrams for "who calls what, in what order.")

Limitations (plan around these):

- Noise exists. False positives and overbroad suggestions happen; Greptile includes severity thresholds and other settings so you can manage the signal-to-noise ratio.

- Coverage and context are uneven. Most tools offer generic suggestions. Greptile indexes your entire codebase, so feedback is tailored to your code.

- Stochasticity. Models are sampling-based, so the same PR can get different comments and one pass can miss edge cases or cross-file bugs. Greptile counters this by grounding reviews in a full repository index for steadier, repeatable results.

- Self-hosting / security. Many tools can't be self-hosted, so code and secrets leave your network. Greptile supports self-hosting in your VPC, keeping data local and under your keys.
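The idea behind a severity threshold and impact ranking can be sketched generically. This is not Greptile's configuration format or implementation: the `Severity` levels, the `Finding` shape, the `triage` function, and the sample findings are assumptions for illustration.

```typescript
type Severity = "low" | "medium" | "high";

interface Finding {
  message: string;
  severity: Severity;
}

// Numeric rank so severities can be compared and sorted.
const severityRank: Record<Severity, number> = { low: 0, medium: 1, high: 2 };

// Drops findings below the threshold, then orders the rest by impact.
// `filter` returns a new array, so the caller's list is not mutated by `sort`.
function triage(findings: Finding[], threshold: Severity): Finding[] {
  return findings
    .filter((f) => severityRank[f.severity] >= severityRank[threshold])
    .sort((a, b) => severityRank[b.severity] - severityRank[a.severity]);
}

const findings: Finding[] = [
  { message: "Prefer const over let", severity: "low" },
  { message: "Subdomain query is missing a parameter", severity: "high" },
  { message: "Frontend and server defaults disagree", severity: "medium" },
];

const shown = triage(findings, "medium");

console.log(shown.map((f) => `${f.severity}: ${f.message}`));
```

Raising the threshold trades recall for less noise, so the right setting depends on how much reviewer attention a team can spend on low-impact comments.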

Conclusion

As shipping speed climbs, the real win is keeping quality high without slowing teams down. AI code review turns PRs into fast, high-signal checkpoints so you can merge with confidence at your pace. Try Greptile today.